Products

Embedded Computing

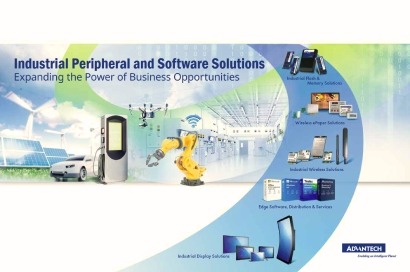

- AIoT Software, Distribution & Services

- Application Focus Embedded Solutions

- Arm-Based Computing Platforms

- Computer On Modules

- Digital Signage Players

- Edge AI & Intelligence Solutions

- Embedded PCs

- Embedded Single Board Computers

- Fanless Embedded Computers

- Gaming Platform Solutions

- Industrial Display Systems

- Industrial Flash & Memory Solutions

- Industrial Motherboards

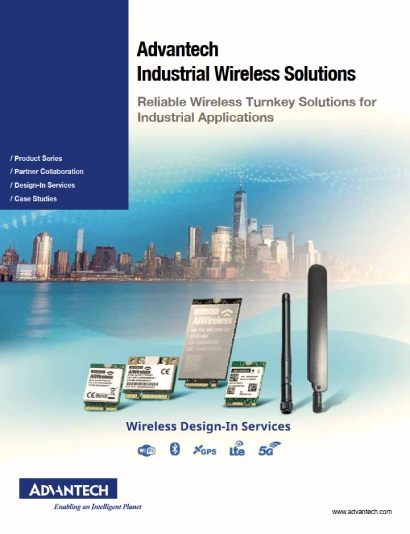

- Industrial Wireless Solutions

- Wireless ePaper Display Solutions

Applied Computing (Design & Manufacturing Service)

Industrial Automation & I/O

- Automation Controllers & I/O

- Certified Solutions

- Class I, Division 2 Solution

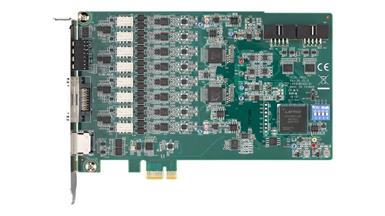

- Data Acquisition (DAQ)

- Embedded Automation Computers

- Gateways & Remote Terminal Units (RTUs)

- Human Machine Interfaces

- Motion Control

- Panel PC

- Power & Energy

- Remote I/O

- WebAccess Software & Edge SRP

- Wireless I/O & Sensors

Intelligent Connectivity

Intelligent Systems

- Box IPC

- CPCI & VPX

- Industrial Computer Peripherals

- Industrial GPU Solutions

- Industrial Motherboards & Slot SBC

- Intelligent Transportation Systems

- Modular IPC

- Rackmount IPC

Cloud, Networking & Servers

- Industrial, Telecom and Cloud Servers

- Network Interface & Acceleration Cards

- Network Security Appliances

- SD-WAN & uCPE Platforms

- WISE-STACK Private Cloud

Computer Vision & Video Solution

-

Resources

In the Resources section, you can catch up on industry news, insights, and the latest technology from Advantech's point of view.

- © 1983-2024 Advantech Co., Ltd.
